In software development, system testing can identify issues with software, but it is often an expensive process. We've been working on a tool that lets you capture data from system tests and use it to automatically generate unit tests that exercise software functions in an equivalent way. This could minimize the engineering effort needed to test software functionality across the software development life cycle.

Software system tests are often used to identify issues with the software under test. These tests, which often involve manual processes carried out by test engineers, take significant effort to run. Lower-level tests such as unit tests typically require less effort to run, but often must be written by hand.

We've been working on a tool that automatically generates unit tests that test software in an equivalent way to system tests. Because these unit tests are generated automatically and are easier to run, you can save a large amount of time by not needing to rerun your system tests every time you change your code.

How does it work?

To capture values from system tests, RVS (Rapita Verification Suite) statically analyzes the source code and instruments it so that it can observe the inputs and outputs of functions, whether they are parameters or global variables. This analysis lets RVS follow pointers and unroll any arrays and structures needed to understand the program's behavior. It can even detect ‘volatile’ results that differ from run to run, such as a newly created file handle or a pointer returned by malloc.
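To make the idea of observing inputs and outputs concrete, here is a minimal, language-neutral model written in Python. It is not how RVS instruments code: the `capture` decorator, the `STATE` dictionary standing in for global variables, and the `accumulate` function are all hypothetical. Each call records its parameters, its return value, and a snapshot of global state before and after the call. Pointer following and volatile-value detection are deliberately left out.

```python
import copy
import functools

# Hypothetical global state standing in for C global variables.
STATE = {"counter": 0}

# Every observed call is appended here as one captured record.
CAPTURED = []


def capture(func):
    """Record parameters, return value and global state around each call.

    A simplified model of function-level instrumentation (hypothetical).
    """
    @functools.wraps(func)
    def wrapper(*args):
        globals_before = copy.deepcopy(STATE)
        result = func(*args)
        CAPTURED.append({
            "function": func.__name__,
            "args": copy.deepcopy(args),
            "globals_before": globals_before,
            "globals_after": copy.deepcopy(STATE),
            "result": result,
        })
        return result
    return wrapper


@capture
def accumulate(x):
    # Hypothetical function under test: reads and writes a global.
    STATE["counter"] += x
    return STATE["counter"]


# Simulate two calls made during a system test run.
accumulate(2)
accumulate(3)
```

After the two calls, `CAPTURED` holds one record per call, and the second record shows that the global counter was already 2 on entry. Deep copies are used so later mutations of the globals cannot alter what was captured.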

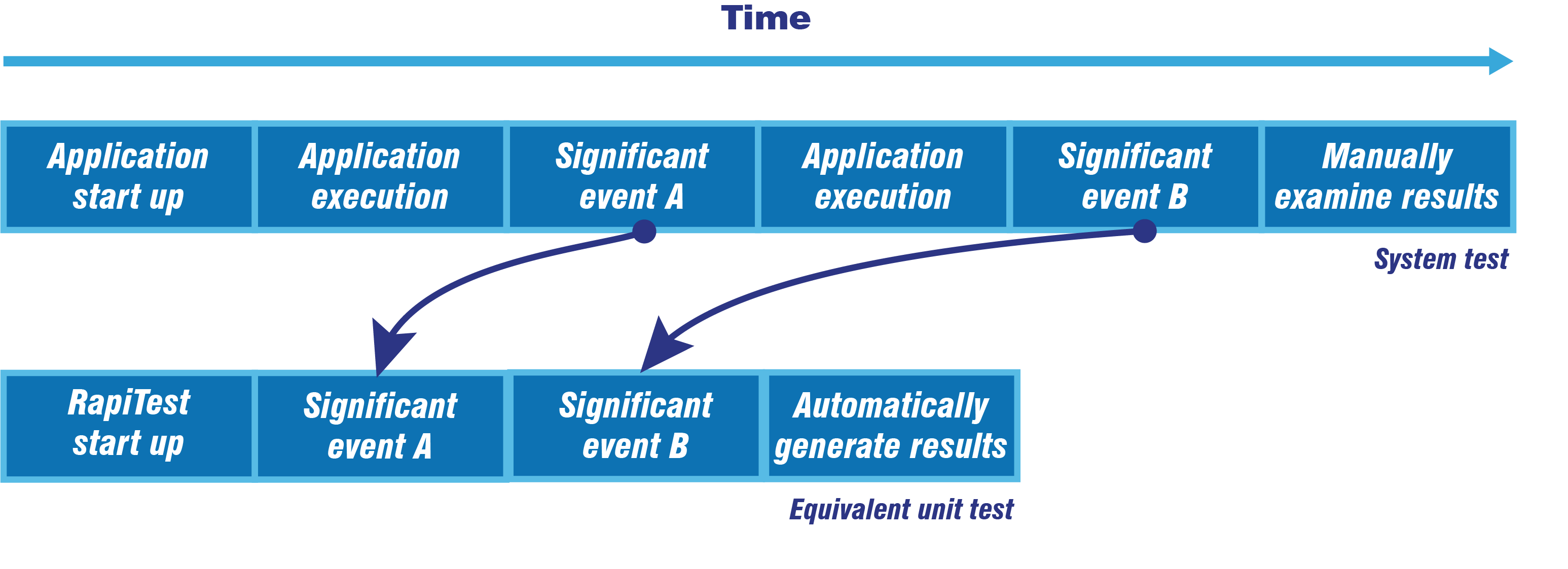

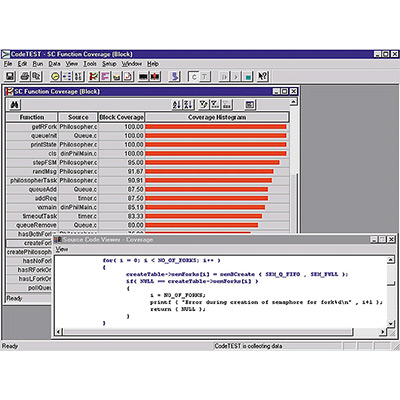

Then, when the system test is run, RVS captures significant events, or "highlights", that you can play back as stand-alone unit tests (Figure 1). A system test may observe dozens of significant events for a specific function over minutes or even hours of execution, while the generated unit tests replay just those events back to back, greatly reducing the time needed to test them.

After capturing data from the system test, you can select functions for which to create unit tests. RVS then examines the captured system test data, including the function's observed input and output parameters, global state changes and call-stack, and generates RapiTest unit tests that mimic the behavior observed during the system test.
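As a rough illustration of turning a captured call into a stand-alone test, the Python sketch below restores the global state observed before the call, calls the function with the captured arguments, and passes only if both the return value and the resulting global state match the capture. The record layout, `make_unit_test`, and `accumulate` are hypothetical and do not reflect RapiTest's test format; the call-stack is not checked here.

```python
import copy

# Hypothetical global state and function under test.
STATE = {"counter": 0}


def accumulate(x):
    STATE["counter"] += x
    return STATE["counter"]


def make_unit_test(func, record):
    """Turn one captured call into a stand-alone, repeatable test function."""
    def test():
        # Restore the global state observed just before the captured call.
        STATE.clear()
        STATE.update(copy.deepcopy(record["globals_before"]))
        result = func(*record["args"])
        # Pass only if the output and the resulting global state match.
        return result == record["result"] and STATE == record["globals_after"]
    test.__name__ = f"test_{func.__name__}_{record['id']}"
    return test


# Illustrative record, as it might have been captured during a system test.
record = {
    "id": 1,
    "args": (3,),
    "globals_before": {"counter": 2},
    "result": 5,
    "globals_after": {"counter": 5},
}

test = make_unit_test(accumulate, record)
```

Because each test sets up its own global state first, it gives the same verdict no matter how many times or in what order it is run.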

When generating tests for a function, you can select which of the observed values to use as criteria for generating new unique tests. For example, you can configure tests to be automatically generated based on differences in coverage, the data being fed to the function, the global state, or the underlying call-stack of the function.
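One way to picture these uniqueness criteria is as a key function: captured calls that produce the same key are treated as duplicates, and only the first is kept as a test. The Python sketch below assumes hypothetical record fields (`args`, `globals_before`, `call_stack`) and omits coverage-based selection, which would need coverage data per call.

```python
from typing import Callable, Hashable

Record = dict


def by_inputs(rec: Record) -> Hashable:
    # Distinguish tests by the data passed to the function.
    return rec["args"]


def by_global_state(rec: Record) -> Hashable:
    # Distinguish tests by the global state on entry.
    return tuple(sorted(rec["globals_before"].items()))


def by_call_stack(rec: Record) -> Hashable:
    # Distinguish tests by the calling context.
    return tuple(rec["call_stack"])


def select_unique(records, criterion: Callable[[Record], Hashable]):
    """Keep the first record for each distinct value of the chosen criterion."""
    seen = set()
    unique = []
    for rec in records:
        key = criterion(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Illustrative captured calls to one function.
records = [
    {"args": (1,), "globals_before": {"mode": 0}, "call_stack": ["main", "step"]},
    {"args": (1,), "globals_before": {"mode": 1}, "call_stack": ["main", "step"]},
    {"args": (2,), "globals_before": {"mode": 1}, "call_stack": ["main", "step"]},
]
```

With these records, selecting by inputs keeps two tests, selecting by global state also keeps two (but a different pair), and selecting by call-stack keeps only one, which shows how the chosen criterion shapes the generated test set.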

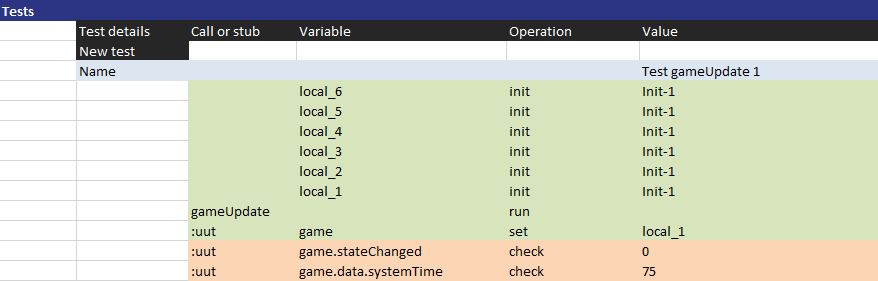

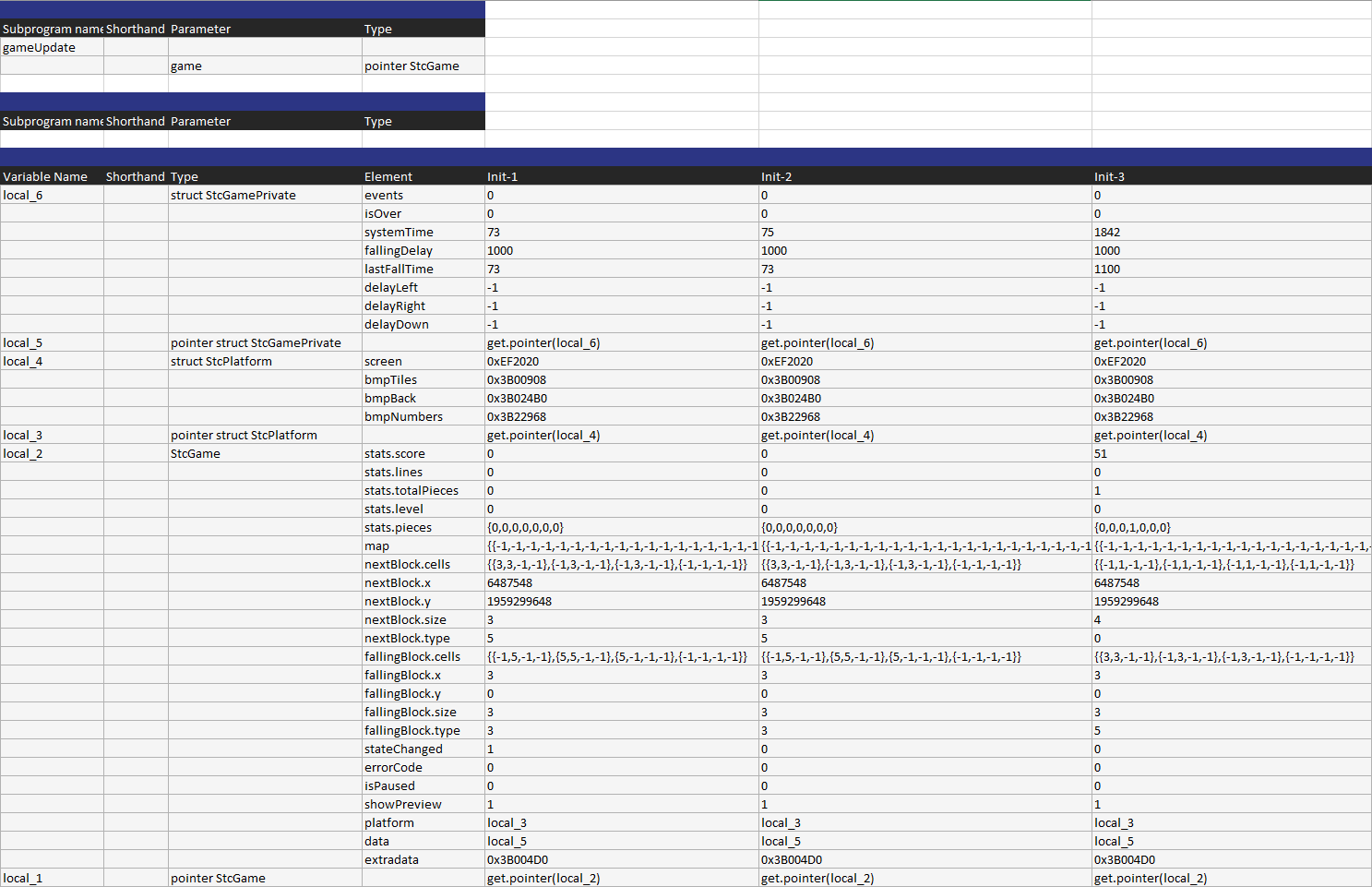

The tests generated by RapiTest can then be run in an automated test environment. Figure 2 shows part of a test spreadsheet generated from system tests, where the criterion for each "unique" test was the data passed to the function under test.

Selecting acceptance criteria

For a test to be meaningful, it must include acceptance criteria. These depend on the test, the application, and the environment in which the test is run. Some tests pass simply if the function under test exits without error, while others need much more complex criteria.

When capturing data for a function, RVS observes its inputs, outputs, global state and call-stack, as well as its timing and coverage behavior. When generating unit tests for the function, you can select which of these observed values to use as acceptance criteria. In testing terms, this can be considered "oracle" testing: if the system test from which RVS captured the results passed when a given input produced the observed output, the unit test should check that the same input produces the same output on every run.
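The oracle idea with selectable criteria can be sketched in a few lines of Python. For simplicity, the hypothetical function under test returns a dictionary of its observable values; a real tool would gather these through instrumentation. With no criteria selected, the test passes as long as the function does not raise, matching the simplest kind of acceptance criterion described above. `run_with_oracle`, `scale`, and the observation names are all illustrative.

```python
from typing import Callable, Dict, List, Sequence


def run_with_oracle(func: Callable[..., Dict], args: tuple,
                    expected: Dict, criteria: Sequence[str]) -> List[str]:
    """Compare selected observations against those captured in the system test.

    Returns a list of failure messages; an empty list means the test passed.
    """
    try:
        observed = func(*args)
    except Exception as exc:
        return [f"raised {type(exc).__name__}"]
    return [f"{name}: expected {expected.get(name)!r}, got {observed.get(name)!r}"
            for name in criteria
            if observed.get(name) != expected.get(name)]


def scale(x):
    # Hypothetical function under test, returning its observable outputs.
    if x < 0:
        raise ValueError("negative input")
    return {"result": x * 2, "status": "ok"}


# Values observed for input 4 during the system test (illustrative).
expected = {"result": 8, "status": "ok"}
```

Selecting fewer criteria makes a test more tolerant of harmless changes; selecting more makes it stricter.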

Figure 3 gives an example of automatically generated test acceptance criteria. This test automatically checks the changes in values that occur when the function under test is executed with a specific input pattern.
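A check on changes in values can be modeled as comparing a "delta": the set of variables whose values changed during the call, with their old and new values. The Python sketch below computes that delta and compares it with one assumed to have been captured during the system test. The `state` variables, `set_speed`, and the captured values are hypothetical.

```python
def changed_values(before: dict, after: dict) -> dict:
    """Map each variable whose value changed to its (old, new) pair."""
    keys = before.keys() | after.keys()
    return {k: (before.get(k), after.get(k))
            for k in sorted(keys)
            if before.get(k) != after.get(k)}


# Hypothetical globals and function under test.
state = {"speed": 0, "mode": "idle", "faults": 0}


def set_speed(target):
    state["speed"] = target
    state["mode"] = "moving" if target > 0 else "idle"


# Change observed during the system test for input 30 (illustrative values).
captured_delta = {"mode": ("idle", "moving"), "speed": (0, 30)}


def test_set_speed_30():
    # Apply the input pattern, run the function, then compare the deltas.
    state.update({"speed": 0, "mode": "idle", "faults": 0})
    before = dict(state)
    set_speed(30)
    return changed_values(before, state) == captured_delta
```

Checking only what changed means the test also fails if the function unexpectedly modifies a variable it did not touch during the system test, such as `faults` here.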

Running the tests

While the unit tests generated by RapiTest are not traceable to requirements, they could let you take the three-hour system test that you have to run for every release, extract the "best bits" of it to generate a few milliseconds' worth of unit tests, and run those as regression tests on a continuous integration server. This would let you, for example, set up regression tests for software functionality you've already completed. If a change to your code alters your software's functionality, your regression tests would automatically flag it for your attention, helping you avoid nasty surprises towards the end of your testing cycle.
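On a continuous integration server, the key requirement is that a run of the generated tests signals failure through its exit status. The Python sketch below runs a set of test functions, reports any that fail, and returns a process exit code; the `run_regression` helper and the test names are hypothetical, and each test is assumed to return `True` on pass.

```python
import sys
from typing import Callable, Dict


def run_regression(tests: Dict[str, Callable[[], bool]]) -> int:
    """Run every generated test and return a process exit code for CI.

    A test passes if it returns True; returning False or raising counts as a failure.
    """
    failures = []
    for name, test in tests.items():
        try:
            passed = test()
        except Exception:
            passed = False
        if not passed:
            failures.append(name)
    for name in failures:
        print(f"FAIL {name}", file=sys.stderr)
    print(f"{len(tests) - len(failures)}/{len(tests)} generated tests passed")
    return 1 if failures else 0


# Hypothetical generated tests; in practice these would come from captured data.
generated_tests = {
    "test_accumulate_1": lambda: 2 + 3 == 5,
    "test_accumulate_2": lambda: 5 + 4 == 9,
}
```

A CI job would then end with `sys.exit(run_regression(generated_tests))`, so any behavioral change caught by a test fails the build.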

This technology offers a flexible approach to testing throughout development and could be used in many ways. It can be combined with other test-generation facilities, such as bounds checking, fuzz testing and automatic test generation for coverage (up to MC/DC), to provide a robust verification layer before formal testing even begins, which could greatly reduce the cost of testing.

We plan to release this technology officially in a future version of RVS. In the meantime, if you want more information about it, or have any ideas on how you would use the technology, contact us.
