Execution time and response time are two concepts that are often conflated. In this post, I will make the distinction between the two clear and explain why both matter in real-time embedded systems design.

First, some terminology. A task is a piece of code that runs within a single thread of execution. A task issues a sequence of jobs, which are queued and then executed on the processor.

The time spent by the job actively using processor resources is its execution time. The execution time of each job instance from the same task is likely to differ.

Common sources of variation are path data dependencies (the path taken through the code depends on input parameters) and hard-to-predict hardware features such as branch prediction, instruction pipelining and caches.

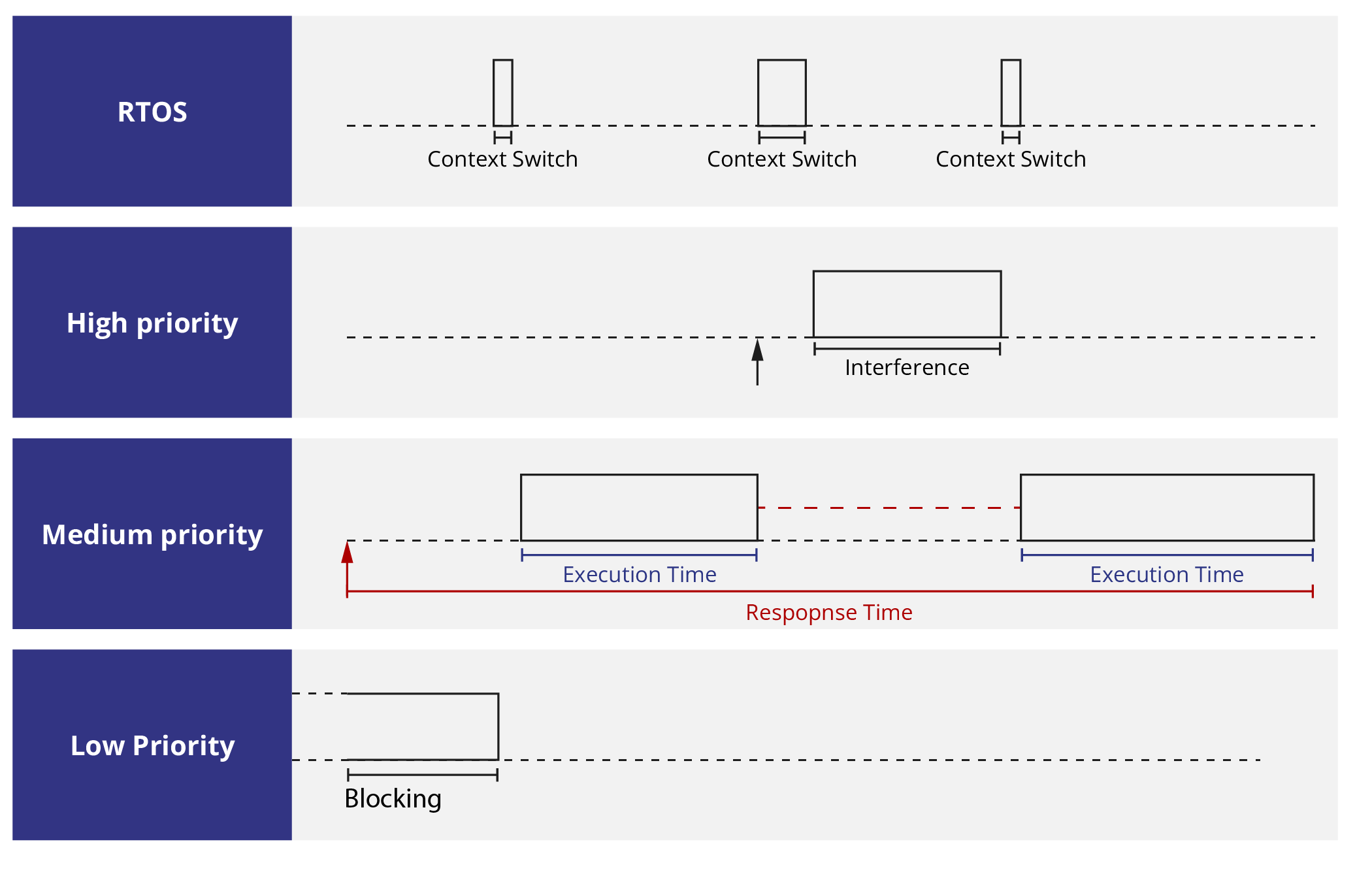

The response time of a job is the time between when it becomes active (e.g. an external event or timer triggers an interrupt) and when it completes. Several factors can cause the response time of a job to be longer than its execution time; Figure 1 shows some of these.

In Figure 1, jobs in the queue are scheduled using fixed priority pre-emptive scheduling. Execution and response times are shown for the medium priority job. The real-time operating system (RTOS) scheduler always selects the highest priority job that is ready to run next. A job is suspended if a higher priority one becomes active, and resumes after all higher priority jobs have completed.
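To make the scheduling rule above concrete, here is a minimal sketch of a fixed priority pre-emptive scheduler simulated in unit time steps. The `Job` class, the `simulate` function and all of the numbers are hypothetical and chosen only for illustration; they are not taken from Figure 1. The sketch also ignores RTOS overheads and shared resources.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int   # assumption of this sketch: larger number = higher priority
    release: int    # tick at which the job becomes active
    exec_time: int  # ticks of processor time the job needs (must be > 0)


def simulate(jobs):
    """Return {job name: response time} under fixed priority pre-emptive scheduling."""
    remaining = {j.name: j.exec_time for j in jobs}
    finish = {}
    t = 0
    while len(finish) < len(jobs):
        # Jobs that have been released and still have work to do.
        ready = [j for j in jobs if j.release <= t and remaining[j.name] > 0]
        if ready:
            # The highest priority ready job gets the processor for this tick,
            # which implicitly pre-empts whatever ran in the previous tick.
            job = max(ready, key=lambda j: j.priority)
            remaining[job.name] -= 1
            if remaining[job.name] == 0:
                finish[job.name] = t + 1
        t += 1
    return {j.name: finish[j.name] - j.release for j in jobs}


jobs = [
    Job("low", priority=1, release=0, exec_time=3),
    Job("medium", priority=2, release=1, exec_time=3),
    Job("high", priority=3, release=2, exec_time=2),
]
responses = simulate(jobs)
print(responses)  # {'low': 8, 'medium': 5, 'high': 2}
```

In this run the medium priority job needs only 3 ticks of processor time, but its response time is 5 ticks because the high priority job pre-empts it for 2 ticks.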

A lower priority job can also prevent a higher priority job from running if it locks a shared resource that the higher priority job needs before the higher priority job does. This is called priority inversion. RTOS overheads for context switches and pre-emptions will also delay a job, although these overheads may be very small with appropriate hardware support. Release jitter caused by insufficient clock granularity is another source of delay (not shown above) [1].

Both execution times and response times are of interest to real-time systems designers, usually in the form of worst-case execution times (WCETs) and worst-case response times (WCRTs). High-level system requirements will specify a maximum response time for a task, known as its deadline. WCRTs are calculated using response time analysis, which takes WCETs and a scheduling policy as inputs. This analysis may in turn lead to execution time budgets and a scheduling policy being derived as lower-level requirements.
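As a rough illustration of how response time analysis combines WCETs with a scheduling policy, the sketch below iterates a commonly used recurrence for fixed priority pre-emptive scheduling of periodic tasks: R = C + B + sum over higher priority tasks j of ceil(R / T_j) * C_j, where C is the WCET, B is the worst-case blocking time and T_j is a period. The function name, the task set and the simplifications (no release jitter, no RTOS overheads, deadline equal to period unless a limit is given) are assumptions of this sketch, not details from this post.

```python
def worst_case_response_time(tasks, index, blocking=0, limit=None):
    """Iterate the response time recurrence for tasks[index].

    tasks is a list of (wcet, period) pairs sorted highest priority first.
    Returns the converged response time, or None if it exceeds the limit
    (assumed here to be the task's period when no limit is given).
    """
    wcet, period = tasks[index]
    limit = period if limit is None else limit
    response = wcet + blocking
    while True:
        # Interference: each higher priority task j can be released
        # ceil(response / T_j) times within a window of length `response`.
        interference = sum(
            -(-response // t_j) * c_j  # integer ceiling division
            for c_j, t_j in tasks[:index]
        )
        new_response = wcet + blocking + interference
        if new_response > limit:
            return None  # the deadline cannot be guaranteed
        if new_response == response:
            return response  # fixed point reached
        response = new_response


# Hypothetical task set: (WCET, period), highest priority first.
tasks = [(1, 4), (2, 6), (3, 12)]
wcrts = [worst_case_response_time(tasks, i) for i in range(len(tasks))]
print(wcrts)  # [1, 3, 10]
```

The lowest priority task has a WCET of 3 but a WCRT of 10. Adding 3 time units of blocking to it (`blocking=3`) pushes the result past its period of 12, so the function returns `None`, which is the kind of finding that leads to execution time budgets being imposed as lower-level requirements.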

Being able to measure response times and execution times individually is important. If response times are measured but execution times are not, then it is not possible to perform worst-case response time analysis. This runs the risk of the system missing a deadline because a particular combination of job sequence and job execution times was not encountered in testing. Response time measurement data is still useful, however, for knowing how close jobs are to missing deadlines.

And finally… in certain circumstances execution time increases may even lead to response time decreases! Using our diagram once again, imagine that a previous job of the high priority task ran until just after the activation of the medium priority task. The low priority job which blocked the medium priority one would not have been able to start and lock the shared resource, allowing the medium priority job to both start and complete earlier. I suggest Baruah and Burns' paper [2] on sustainable scheduling analysis as further reading.
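The effect described above can be reproduced with a small simulation. This is a sketch under stated assumptions: the `Job` class, the `simulate` and `medium_response` functions and all timings are hypothetical, the low and medium priority jobs are assumed to hold a single shared lock for their whole execution, and the high priority job does not use the lock.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int   # assumption of this sketch: larger number = higher priority
    release: int    # tick at which the job becomes active
    exec_time: int  # ticks of processor time the job needs (must be > 0)
    uses_lock: bool = False  # holds the shared lock from first tick to completion


def simulate(jobs):
    """Fixed priority pre-emptive scheduling with one shared lock."""
    remaining = {j.name: j.exec_time for j in jobs}
    finish = {}
    lock_holder = None
    t = 0
    while len(finish) < len(jobs):
        ready = [j for j in jobs if j.release <= t and remaining[j.name] > 0]
        # A job that needs the lock cannot run while another job holds it.
        runnable = [
            j for j in ready
            if not j.uses_lock or lock_holder in (None, j.name)
        ]
        if runnable:
            job = max(runnable, key=lambda j: j.priority)
            if job.uses_lock:
                lock_holder = job.name
            remaining[job.name] -= 1
            if remaining[job.name] == 0:
                finish[job.name] = t + 1
                if lock_holder == job.name:
                    lock_holder = None
        t += 1
    return {j.name: finish[j.name] - j.release for j in jobs}


def medium_response(high_exec_time):
    """Response time of the medium job for a given high priority execution time."""
    jobs = [
        Job("high", priority=3, release=0, exec_time=high_exec_time),
        Job("low", priority=1, release=2, exec_time=3, uses_lock=True),
        Job("medium", priority=2, release=3, exec_time=2, uses_lock=True),
    ]
    return simulate(jobs)["medium"]


short_high = medium_response(2)  # high job finishes before the low job is released
long_high = medium_response(4)   # high job runs past the medium job's activation
print(short_high, long_high)  # 4 3
```

When the high priority job takes 2 ticks, the low priority job starts first, takes the lock, and the medium priority job has to wait for it, giving a response time of 4. When the high priority job takes 4 ticks, the low priority job never gets the chance to take the lock before the medium priority job is ready, and the medium priority job's response time drops to 3.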

[1] Neil Audsley, Iain Bate and Alan Burns, "Putting fixed priority scheduling theory into engineering practice for safety critical applications", In Proceedings of the 2nd IEEE Real-Time Technology and Applications Symposium (RTAS '96), pages 2-10, 1996.

[2] Sanjoy Baruah and Alan Burns, "Sustainable Scheduling Analysis", In Proceedings of the 27th IEEE International Real-Time Systems Symposium (RTSS 2006), pages 159-168, 2006.
